Dermatologists have a tough job. When a patient gets a skin exam, their dermatologist looks at the entire body and finds the strange, suspicious outliers and determines whether or not they should be biopsied. They also have to be on the lookout for changing moles, which is difficult without a visual reference of the patient. Primary care physicians (PCPs) have it even harder because they don’t spend the majority of their day inspecting skin and looking for dangerous lesions.

At SkinIO, we empathize. We spend a lot of time thinking about how to take some of the pressure off of doctors and provide tools that let them be more efficient. In an era full of articles claiming that algorithms beat doctors at diagnosing lesions, interpreting CAT scans, and predicting heart attacks, it can seem easy to use machine learning to provide medical care. But these articles cover a small part of what a doctor does, and their results can’t always be used in the real world.

That doesn’t mean that machine learning can’t improve care, but it does mean that AI practitioners should try to supplement the experience and intuition of doctors rather than replace it. That’s our motivation at SkinIO, and we’re lucky to have relationships with dermatologists who are eager for tools to make their time with patients more intentional and productive. This is the first in a series of articles about how we accomplish that goal with image processing and machine learning.

How We Help Doctors Monitor Skin

One of the biggest difficulties in dermatology is the lack of a baseline. Dermatologists are excellent at locating moles that stand out from the rest, but it can be difficult to determine change from a single visual exam every year. With photographic records of a patient’s skin taken every six months or every year, dermatologists can locate new or changing lesions.

However, photographic evidence can be difficult to use in the office, especially if the patient has a large number of features on the skin. Looking for small differences over a patient’s entire body can consume a lot of a doctor’s valuable time.

Imagine playing this game, but everything is moles and there are hundreds of them. Image from www.iqpuzzlez.com

That’s where we come in. Here are just a few examples of the benefits that SkinIO provides:

Because we locate moles and lesions on the body, dermatologists can easily attach dermoscopic or close-up photographs to the exact features on the skin, facilitating future follow-ups and comparison.

By matching the moles and lesions between photos, we can search for new spots and alert doctors to a spot that wasn’t present in the previous picture. As 71% of melanomas come from a new spot, this can help dermatologists focus on the needle in the haystack.

After matching spots on the skin, we can detect changes in their properties. Sometimes the changes are small and hard to catch by a visual inspection. By identifying changes algorithmically, suspicious moles can be brought to the attention of a dermatologist earlier.

How We Analyze Images

So how do we do it? Below is a figure illustrating the process at SkinIO.

From the patient photograph to a breakdown of new, changed, and suspicious spots.

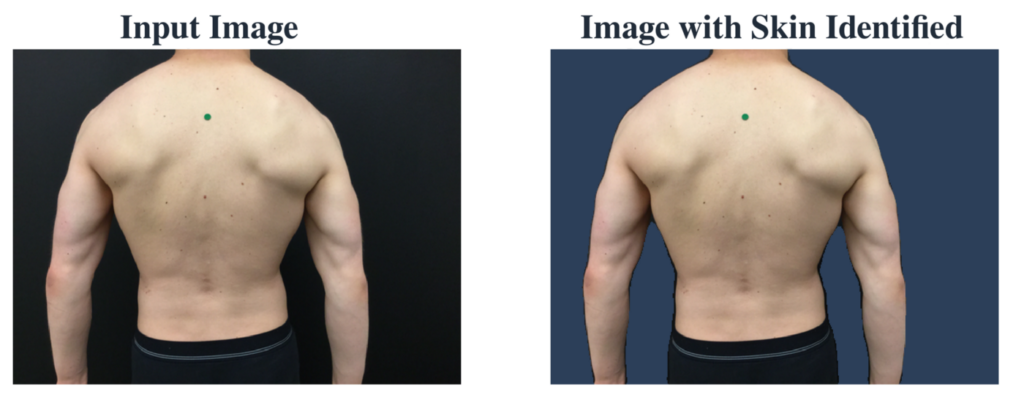

The first step is isolating the skin. We currently do this by estimating the color distribution of the person’s skin and filtering out the background. We’re in the process of switching to a new deep learning approach, which will allow us to work in almost any environment without a backdrop.

A patient photo before and after the skin has been isolated. The background is ignored during later processing.
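The color-based filtering described above can be sketched roughly as follows. This is a minimal illustration, not SkinIO's production code: it assumes a single Gaussian color model fit to a patch that is known to contain only skin, and the names `isolate_skin`, `sample_box`, and `max_distance` are hypothetical.

```python
import numpy as np

def isolate_skin(image, sample_box, max_distance=3.0):
    """Return a boolean mask of pixels whose color resembles the sampled skin.

    image: H x W x 3 float array.
    sample_box: (top, bottom, left, right) region assumed to contain only
    skin (a hypothetical input for this sketch).
    max_distance: Mahalanobis distance cutoff (an assumed threshold).
    """
    top, bottom, left, right = sample_box
    samples = image[top:bottom, left:right].reshape(-1, 3)

    # Model the person's skin color as a single Gaussian.
    mean = samples.mean(axis=0)
    cov = np.cov(samples, rowvar=False) + 1e-6 * np.eye(3)  # regularized
    inv_cov = np.linalg.inv(cov)

    # Squared Mahalanobis distance of every pixel from the skin color model.
    diff = image.reshape(-1, 3) - mean
    dist_sq = np.einsum("ij,jk,ik->i", diff, inv_cov, diff)

    # Pixels far from the skin model are treated as background.
    return (dist_sq <= max_distance ** 2).reshape(image.shape[:2])
```

A single Gaussian is the simplest possible choice here. It would likely struggle with shadows or uneven lighting, which is one reason a learned segmentation model can be more robust.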

Once we’ve identified the skin, we search for lesions on the body. We do this with a two-step process. In the first step, we identify a large set of candidate detections using a blob detector. We optimize this part of the process to locate as many lesions as possible between 1.5 mm and 1.5 cm, so it produces a large number of false positives. To reduce the number of false positives, we send the set of candidates through a deep learning classifier trained on internal data. This follow-up step reduces the number of false positives by 84%. Together, these two steps identify the set of all the lesions that will be tracked over time. Dermatologists can interact with each of the identified lesions to attach images or notes to patients.

Each of these detections can be interacted with by a dermatologist to store information or communicate with a patient.
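To make the two-step idea concrete, here is a small sketch. The article does not say which blob detector is used, so this assumes a Laplacian-of-Gaussian (LoG) detector, where a blob of radius r responds most strongly at sigma = r / sqrt(2). The functions `log_sigma_range` and `filter_candidates`, the `pixels_per_mm` parameter, and the 0.5 threshold are all illustrative assumptions.

```python
import math

def log_sigma_range(min_diameter_mm, max_diameter_mm, pixels_per_mm):
    """Convert a lesion diameter range into LoG sigmas, in pixels.

    Assumes a LoG detector, whose response to a disk of radius r
    peaks at sigma = r / sqrt(2).
    """
    def to_sigma(diameter_mm):
        radius_px = (diameter_mm / 2.0) * pixels_per_mm
        return radius_px / math.sqrt(2.0)

    return to_sigma(min_diameter_mm), to_sigma(max_diameter_mm)

def filter_candidates(candidates, score_fn, threshold=0.5):
    """Second stage: keep candidates the classifier scores at or above threshold.

    score_fn stands in for a trained classifier (hypothetical here).
    """
    return [c for c in candidates if score_fn(c) >= threshold]

# Search scales for lesions between 1.5 mm and 1.5 cm (15 mm),
# assuming a photo resolution of 4 pixels per millimeter.
min_sigma, max_sigma = log_sigma_range(1.5, 15.0, pixels_per_mm=4.0)
```

Splitting the work this way lets the first stage favor recall (miss as few lesions as possible) while the classifier restores precision.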

We then calculate properties of the lesion. This process is harder than it might seem. Calculating properties requires identifying the boundary of the lesion, which is difficult because the lesion can be small and lack well-defined edges. We currently identify this boundary with a U-Net, a deep learning segmentation model. After the boundary has been identified, we extract properties like the dimensions, shape, and color profile of the lesion. This covers the first four parts of the ABCDEs of melanoma (Asymmetry, Border, Color, and Diameter).

It can be difficult to isolate the exact boundary of a lesion, particularly when it is small and low-resolution. Deep learning models can be more resilient to gradual boundaries and faint lesions.
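Once a boundary is available as a binary mask (for example, the output of a segmentation model), simple versions of these properties can be computed directly. The sketch below is an assumption-laden illustration, not the measures SkinIO uses: `lesion_properties` and `mm_per_pixel` are hypothetical names, diameter is taken as the equal-area circle diameter, and asymmetry is a crude left-right mirror comparison.

```python
import numpy as np

def lesion_properties(mask, image, mm_per_pixel):
    """Compute simple size, shape, and color measures for one lesion.

    mask: H x W boolean array marking lesion pixels.
    image: H x W x 3 float array of the same photo region.
    mm_per_pixel: physical scale of the photo (assumed to be known).
    """
    ys, xs = np.nonzero(mask)
    area_px = ys.size

    # Diameter of the circle that has the same area as the lesion.
    diameter_mm = 2.0 * np.sqrt(area_px / np.pi) * mm_per_pixel

    # Crude asymmetry: mirror the lesion left-to-right inside its bounding
    # box and measure how much of it fails to overlap (0 means symmetric).
    crop = mask[ys.min():ys.max() + 1, xs.min():xs.max() + 1]
    mirrored = crop[:, ::-1]
    overlap = np.logical_and(crop, mirrored).sum()
    union = np.logical_or(crop, mirrored).sum()
    asymmetry = 1.0 - overlap / union

    # Color profile: per-channel mean and spread over lesion pixels.
    pixels = image[mask]
    return {
        "diameter_mm": float(diameter_mm),
        "asymmetry": float(asymmetry),
        "mean_color": pixels.mean(axis=0),
        "color_std": pixels.std(axis=0),
    }
```

Every value here depends on the mask, which is why the quality of the boundary matters so much for small, faint lesions.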

If this is the first set of photos taken with SkinIO, the process stops here. If it’s a follow-up set, the lesions from the two photographs are then matched against each other. The matching is driven primarily by geometry, using the thin-plate spline robust point matching (TPS-RPM) algorithm. This method tends to mismatch when many lesions are very close together, especially when the patient’s body has moved significantly between photos. To solve this, we add information about the appearance of each lesion to clear up the confusion.
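The sketch below shows why appearance helps when lesions are close together. It is deliberately not TPS-RPM, which alternates between soft assignment and fitting a thin-plate spline warp. Instead it is a much simpler greedy stand-in that adds an appearance term to a distance-based cost. The function `match_lesions`, the feature vectors, `appearance_weight`, and `max_cost` are all assumptions made for illustration.

```python
import numpy as np

def match_lesions(prev_xy, curr_xy, prev_feat, curr_feat,
                  appearance_weight=1.0, max_cost=20.0):
    """Greedily pair lesions using position plus appearance.

    Returns a dict mapping current lesion index -> previous lesion index.
    Positions are (x, y) in pixels; features are any numeric appearance
    vectors (for example, mean color). Both are hypothetical inputs.
    """
    prev_xy = np.asarray(prev_xy, dtype=float)
    curr_xy = np.asarray(curr_xy, dtype=float)
    prev_feat = np.asarray(prev_feat, dtype=float)
    curr_feat = np.asarray(curr_feat, dtype=float)

    # Pairwise costs: rows are current lesions, columns are previous ones.
    geometric = np.linalg.norm(curr_xy[:, None] - prev_xy[None], axis=2)
    appearance = np.linalg.norm(curr_feat[:, None] - prev_feat[None], axis=2)
    cost = geometric + appearance_weight * appearance

    matches, used_prev = {}, set()
    # Visit candidate pairs from cheapest to most expensive.
    for flat_index in np.argsort(cost, axis=None):
        i, j = map(int, np.unravel_index(flat_index, cost.shape))
        if cost[i, j] > max_cost:
            break  # all remaining pairs are too dissimilar to match
        if i not in matches and j not in used_prev:
            matches[i] = j
            used_prev.add(j)
    return matches

# Two neighboring lesions, one dark (0.2) and one light (0.8); the patient
# has shifted 1.2 pixels to the right between photos.
prev_xy, prev_feat = [(10.0, 10.0), (12.0, 10.0)], [[0.2], [0.8]]
curr_xy, curr_feat = [(11.2, 10.0), (13.2, 10.0)], [[0.2], [0.8]]

by_geometry = match_lesions(prev_xy, curr_xy, prev_feat, curr_feat,
                            appearance_weight=0.0)
with_appearance = match_lesions(prev_xy, curr_xy, prev_feat, curr_feat,
                                appearance_weight=10.0)
```

In this toy case, geometry alone pairs the shifted dark lesion with its light neighbor, while the appearance term recovers the correct pairing.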

Matching lesions improves the process of comparing photos. When a doctor clicks on a lesion in the current photo, the previous photo pans to the matching lesion. We also identify lesions that don’t have a possible partner in the previous photo and suggest them to the doctor as new. In addition, matching lets us detect changes in the calculated properties of the lesions. Our results draw doctors’ attention to the lesions that are most likely to be new or changing, and help them visually compare lesions across time in the iOS app. Dermoscopic and close-up images are also propagated across matches, making it easier to inspect lesions that the dermatologist has identified as suspicious.
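Given a set of matches and the properties calculated for each lesion, flagging new and changed lesions can be as simple as the sketch below. The function `review_queue`, the property names, and the 20% relative-change threshold are hypothetical choices for illustration, not SkinIO's actual criteria.

```python
def review_queue(matches, prev_props, curr_props, rel_change=0.2):
    """Split current lesions into 'new' and 'changed' lists for review.

    matches: dict mapping current lesion index -> previous lesion index.
    prev_props, curr_props: lists of dicts of numeric lesion properties.
    rel_change: relative change that triggers a flag (assumed value).
    """
    new, changed = [], []
    for i, props in enumerate(curr_props):
        if i not in matches:
            # No partner in the previous photo: suggest it as new.
            new.append(i)
            continue
        before = prev_props[matches[i]]
        # Flag the lesion on the first property that moved by more than
        # rel_change; missing or zero baselines are skipped.
        for name, value in props.items():
            old = before.get(name)
            if old and abs(value - old) / abs(old) > rel_change:
                changed.append((i, name))
                break
    return new, changed

previous = [{"diameter_mm": 3.0}, {"diameter_mm": 4.0}]
current = [{"diameter_mm": 3.1}, {"diameter_mm": 5.2}, {"diameter_mm": 2.0}]
new, changed = review_queue({0: 0, 1: 1}, previous, current)
```

Here the third lesion has no match and is listed as new, and the second is listed as changed because its diameter grew by 30%.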

The tools made possible by the image processing described here can dramatically improve the efficiency of skin exams done in dermatology and primary care offices. By matching across images, we improve the usefulness of the photographic baseline and make organizing dermoscopic images easy. By detecting new and changed lesions, we help draw attention to lesions that could be easily missed in a sea of freckles or moles.

At SkinIO, we want to empower doctors to do what they do best, giving them tools to make their job faster, easier, and more accurate.